Advanced Recurrent Neural Networks: Long Short Term Memory and Gated Recurrent Units; large scale language modeling, open vocabulary language modelling and morphology.Simple Recurrent Neural Networks: model definition; the backpropagation through time optimisation algorithm; small scale language modelling and text embedding.Introduction/Conclusion: Why neural networks for language and how this course fits into the wider fields of Natural Language Processing, Computational Linguistics, and Machine Learning.This course will cover a subset of the following topics: The course will contain a significant practical component and it will be assumed that participants are proficient programmers. No prior linguistic knowledge will be assumed. Students should have a good knowledge of basic Machine Learning, either from an introductory course or practical experience. This course will make use of a range of basic concepts from Probability, Linear Algebra, and Continuous Mathematics. Be able to implement and evaluate common neural network models for language.Have an awareness of the hardware issues inherent in implementing scalable neural network models for language data.Understand neural implementations of attention mechanisms and sequence embedding models and how these modular components can be combined to build state of the art NLP systems.Be able to derive and implement optimisation algorithms for these models.

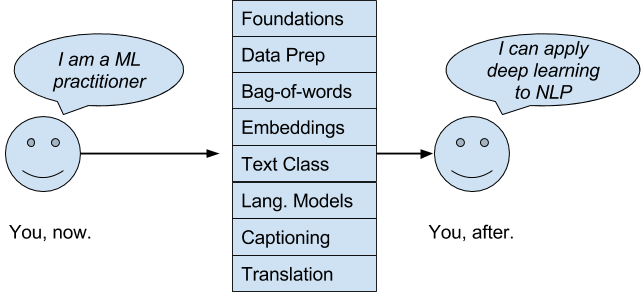

Understand the definition of a range of neural network models;.Chris Dyer (Carnegie Mellon University and DeepMind)Īfter studying this course, students will:.Phil Blunsom (Oxford University and DeepMind).This course will be lead by Phil Blunsom and delivered in partnership with the DeepMind Natural Language Research Group. Throughout the course the practical implementation of such models on CPU and GPU hardware will also be discussed. These topics will be organised into three high level themes forming a progression from understanding the use of neural networks for sequential language modelling, to understanding their use as conditional language models for transduction tasks, and finally to approaches employing these techniques in combination with other mechanisms for advanced applications. The course will cover a range of applications of neural networks in NLP including analysing latent dimensions in text, transcribing speech to text, translating between languages, and answering questions. We will introduce the mathematical definitions of the relevant machine learning models and derive their associated optimisation algorithms. This will be an applied course focussing on recent advances in analysing and generating speech and text using recurrent neural networks. Recently statistical techniques based on neural networks have achieved a number of remarkable successes in natural language processing leading to a great deal of commercial and academic interest in the field The ambiguities and noise inherent in human communication render traditional symbolic AI techniques ineffective for representing and analysing language data. Automatically processing natural language inputs and producing language outputs is a key component of Artificial General Intelligence. This is an advanced course on natural language processing. Deep Learning for Natural Language Processing:
